Large language models (LLMs) are powerful but fundamentally static tools. Once trained, they don’t continue learning or adapt based on new information unless they’re fine-tuned—a costly and complex process. They also don’t retain memory between sessions. Each interaction starts with the same frozen knowledge base and no awareness of anything that came before. This creates a gap between what we want from these models—dynamic, personalized, up-to-date responses—and what they’re actually capable of out of the box.

To work around these limitations, we use techniques that shape the model’s behavior during inference. One of the most effective of these is In-Context Learning.

In-context learning refers to a model’s ability to adapt its behavior based on the user’s prompt, and there are several techniques. Below, we will look at a few common methods: zero-shot, one-shot, few-shot, and many-shot prompting.

Zero-shot Learning

Without any further instructions, a model will respond based only on how it was trained, known as zero-shot learning. An example might be asking a model to evaluate several customer reviews without any additional instructions.

One-shot Learning

In the case of one-shot learning, we could provide the model with our source data and a single example of how we expect the output, such as showing the model that we wish to have the customer’s name, a colon, and then a determination of whether the review was positive or negative.

Few-shot Learning

One-shot learning may not be sufficient to ensure consistent results, so more often we will wish to utilize few-shot learning, where we provide several examples which the model can more easily and accurately conceptualize. In this case, we may list several examples with our source data.

Many-shot Learning

Finally, for more precision or with more complex tasks, we can utilize many-shot, which simply means we provide even more examples of expected output.

Demonstrating Few-shot Learning

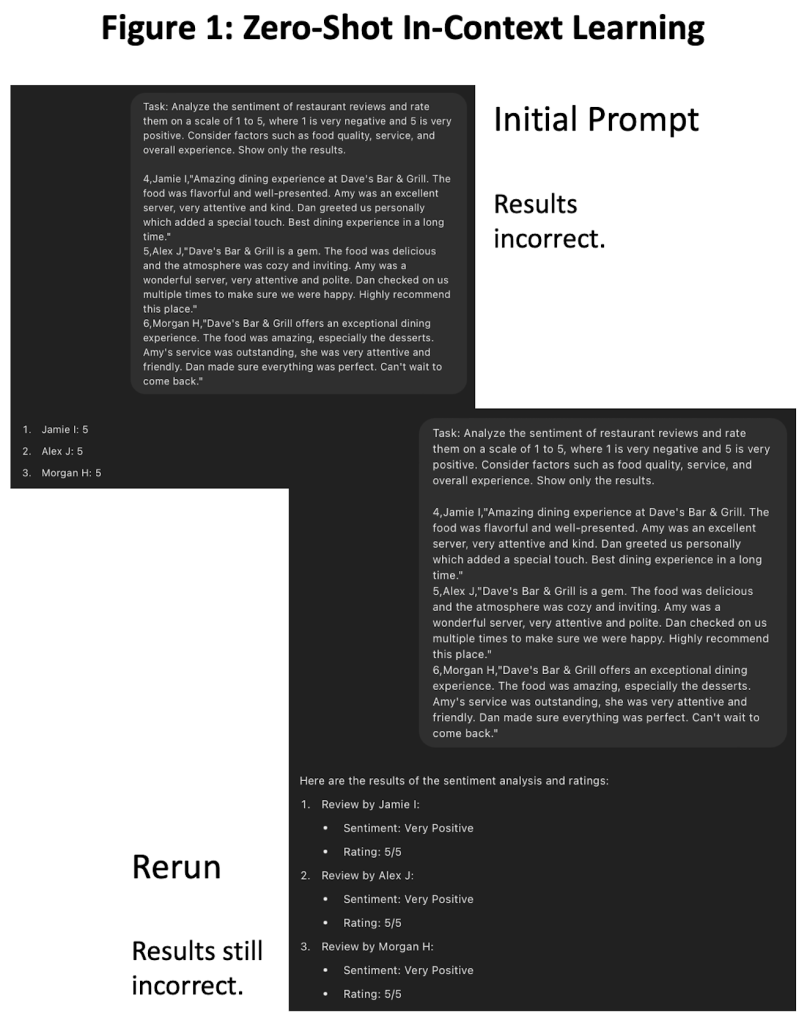

Let’s use an example of sentiment analysis to demonstrate few-shot learning. Suppose we want to score restaurant reviews from 1-5 and need to keep the exact format but with an added field. We give ChatGPT a basic instruction and our data without any additional information (zero-shot). Depending on how ChatGPT is trained, it may not deliver the results we expect (see figure 1).

Now, along with the prompt, let’s show ChatGPT both the source data and the results we expect as an example and then provide the new data for the model to continue with the same function and format (see figure 2). Now, the model understands exactly what we want and can duplicate the format.

As you can see, even though we can’t permanently retrain a static model through inference, we can provide additional guidance through example to assist models in understanding what we expect, all without the complexity and expense of fine-tuning.

Leave a comment